Data Catalogs Explained: Use Cases, Benefits & Features

What is a data catalog?

A data catalog is a self-service analytics platform built on metadata (data that describes or summarizes other data). It lets data engineers and analytics professionals easily find, access, and contribute to data assets.

What does a data catalog do?

Data catalogs organize metadata, such as descriptions, ownership, and sensitivity levels, so teams can effectively navigate and control data assets.

The easier it is for data analysts to find, access, prepare, and trust data with catalogs, the more likely it is that business intelligence (BI) initiatives and big data projects will be successful.

Data catalogs maintain an inventory of all data assets within an enterprise, recording the ownership, definitions, and relationships of the data available in the enterprise's data systems.

These catalogs help data analysts quickly find the most relevant information they need for a project, while enabling compliance teams to effectively manage access to sensitive data assets.

How do enterprises benefit from data catalogs?

The use of data catalogs is increasing as enterprises are now relying more and more on data analytics and business intelligence (BI) to drive their business strategies and operations.

Cataloging data makes it simple for teams to navigate and control data assets, empowering users to:

- Leverage metadata and data management tools to create an inventory of data assets within the organization, allowing users to find and access information quickly and easily.

- Gain 360-degree visibility into each data asset's profile.

- Identify and track sensitive information for regulatory purposes.

- Control who has data access through policy enforcement and role-based access control, for stronger data security.

Which types of information do data catalogs store?

1. Metadata on dashboards - In large organizations, the team that creates a data dashboard is often not the team that will actually use it to make business decisions. It is important to organize metadata on the charts, the metrics, and the nuances of each dashboard, so decision makers can quickly identify how to interpret the data and make informed business decisions.

2. Metadata on internal business systems - In many scenarios, data analysts start their analytics journey by identifying a business system and then discovering useful metrics within that system to gain better real-time insights. By storing metadata on the data that resides in each system, teams can uncover valuable insights more effectively.

3. Metadata on data sets - Data sets describe a specific entity within the enterprise. For instance, a 'deals' data set from a customer relationship management (CRM) tool would store information on every deal in the sales pipeline. By organizing metadata on each data set, users can better understand what each entity represents to the business, how entities relate to each other, and how best to drill into the particular attributes of each entity to generate novel real-time insights.

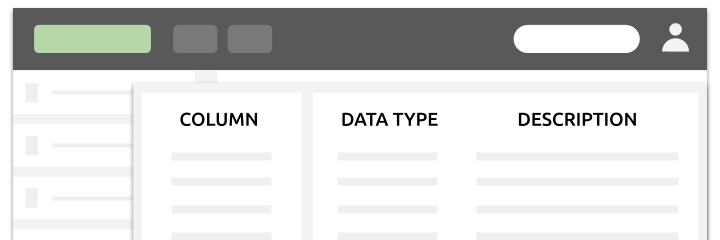

4. Metadata on particular attributes - In the 'deals' data set above, there are likely ten to twenty important attributes for each deal (owner, primary contact, deal size, etc.). Each attribute is represented as a column, a field, or a property in data systems, and this is typically the most granular level of metadata organized within data catalogs. For example, the 'contact_email_address' field in the 'deals' data set could have a description, an owner, and a sensitivity level.

At each level of granularity, companies typically track metadata on data lineage (i.e., which system each piece of data came from), when the data was last updated, and granular relationships that show how entities relate to one another.
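To make these levels of granularity concrete, here is a minimal sketch of how a catalog might model dataset-level and attribute-level metadata for the 'deals' example. The class and field names (`DatasetEntry`, `AttributeEntry`, `source_system`, `related_to`) and the sensitivity labels are hypothetical, not any particular product's schema.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Dict, List, Optional


@dataclass
class AttributeEntry:
    """Metadata for one column, field, or property (hypothetical schema)."""
    name: str
    description: str = ""
    owner: Optional[str] = None
    sensitivity: str = "public"  # assumed labels: "public", "internal", "pii"


@dataclass
class DatasetEntry:
    """Metadata for one data set, e.g. 'deals' from a CRM (hypothetical schema)."""
    name: str
    source_system: str  # lineage: which system the data came from
    last_updated: datetime
    attributes: Dict[str, AttributeEntry] = field(default_factory=dict)
    related_to: Dict[str, str] = field(default_factory=dict)  # related data set -> join key

    def add_attribute(self, attr: AttributeEntry) -> None:
        self.attributes[attr.name] = attr

    def sensitive_attributes(self) -> List[str]:
        """Names of attributes labelled anything other than public."""
        return sorted(a.name for a in self.attributes.values() if a.sensitivity != "public")


deals = DatasetEntry(
    name="deals",
    source_system="crm",
    last_updated=datetime(2024, 1, 15, 9, 30),
    related_to={"contacts": "primary_contact_id"},
)
deals.add_attribute(AttributeEntry("deal_size", "Expected contract value", owner="sales-ops"))
deals.add_attribute(
    AttributeEntry("contact_email_address", "Primary contact's email",
                   owner="sales-ops", sensitivity="pii")
)
print(deals.sensitive_attributes())  # ['contact_email_address']
```

Keeping attributes inside their data set mirrors the hierarchy described above, so lineage and freshness live at the data set level while descriptions, owners, and sensitivity live on each field.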

How are data catalogs populated with information?

Many cataloging platforms can automatically generate certain metadata from business systems.

- For example, cataloging tools can automatically sample a subset of the data in a system and feed it into artificial intelligence / machine learning (AI / ML) models to determine whether the data is sensitive (does it look like personally identifiable information?) and what it represents (does it look like an email address or a company domain?).

- With automation and effective logic, cataloging tools can generate and manage certain metadata with little to no human input.

- However, some aspects of the cataloging process will always be manual. Users will continue to create new custom attributes and to build valuable data sets that never existed before.

- Companies need a strong culture of data governance, combined with policies, procedures, and controls, to ensure that such changes are reflected in the data catalog for the benefit of the broader organization.
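The sample-then-classify step can be sketched as follows. This version plainly swaps the AI/ML models for hand-written regular expressions. The patterns, the 80% match threshold, and the name `classify_column` are illustrative assumptions.

```python
import random
import re
from typing import List, Optional

# Hypothetical rule set standing in for an ML classifier: label -> (pattern, is_sensitive)
PATTERNS = {
    "email_address": (re.compile(r"^[^@\s]+@[^@\s]+\.[A-Za-z]{2,}$"), True),
    "company_domain": (re.compile(r"^(?:[A-Za-z0-9-]+\.)+[A-Za-z]{2,}$"), False),
}


def classify_column(values: List[str], sample_size: int = 100,
                    threshold: float = 0.8, seed: int = 0) -> Optional[dict]:
    """Sample a column's values and guess what the column represents.

    Returns {"label": ..., "sensitive": ...} for the first pattern matching at
    least `threshold` of the sampled non-empty values, or None otherwise.
    """
    non_empty = [v for v in values if v]
    if not non_empty:
        return None
    rng = random.Random(seed)  # seeded so repeated scans give the same sample
    sample = rng.sample(non_empty, min(sample_size, len(non_empty)))
    for label, (pattern, sensitive) in PATTERNS.items():
        hits = sum(1 for v in sample if pattern.match(v))
        if hits / len(sample) >= threshold:
            return {"label": label, "sensitive": sensitive}
    return None


emails = ["ana@example.com", "bo@example.org", "cy@example.net", ""]
domains = ["example.com", "acme.io", "sub.example.org"]
print(classify_column(emails))   # {'label': 'email_address', 'sensitive': True}
print(classify_column(domains))  # {'label': 'company_domain', 'sensitive': False}
```

Sampling keeps the scan cheap on large tables. The trade-off is that a column with only a few sensitive values may slip under the threshold, which is one reason manual review remains part of the process.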

What are the main types of data catalogs?

- Off-the-shelf data catalog tools

- Open source data catalog tools

In both categories, the data catalogs may be delivered as part of a cloud-based data lake or data preparation solution.

However, you are likely to end up with multiple data catalogs as your list of vendors grows, which tends to make plugging in a business intelligence solution more tedious.


Data catalogs for data lakes

- Data scientists, data stewards, and data engineers are the primary users of data catalogs for data lakes.

- Because data in a data lake remains raw and unprocessed, without a catalog data scientists have to pull datasets and then examine the individual data tables, which adds considerable manual preparation time.

- With a data catalog, by contrast, built-in machine learning capabilities can recognize the available data and create a universal schema based on those elements.

- A data catalog does not automate everything, although its intake of structured data does involve significant automated processing.

- Even with unstructured data, built-in machine learning and artificial intelligence capabilities can learn from the data and provide initial recommendations that speed up processing.

- Data catalogs for data lakes have seen limited adoption among business users and data professionals looking to access the data and leverage it for their own digital initiatives.
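Here is a minimal sketch of deriving a single schema from raw, semi-structured records in a lake. It uses simple type inference rather than machine learning. The JSON-lines input and the function name `infer_schema` are assumptions for illustration.

```python
import json
from typing import Dict, Iterable, Set


def infer_schema(lines: Iterable[str]) -> Dict[str, Set[str]]:
    """Merge the fields of raw JSON records into one schema.

    Each field maps to the set of Python type names observed for it, so
    inconsistent fields (e.g. an id that is sometimes a string) stand out.
    """
    schema: Dict[str, Set[str]] = {}
    for line in lines:
        record = json.loads(line)
        for key, value in record.items():
            schema.setdefault(key, set()).add(type(value).__name__)
    return schema


raw = [
    '{"deal_id": 1, "owner": "ana", "deal_size": 5000.0}',
    '{"deal_id": "2", "owner": "bo"}',
    '{"deal_id": 3, "owner": "cy", "stage": "won"}',
]
schema = infer_schema(raw)
for field_name in sorted(schema):
    print(field_name, sorted(schema[field_name]))
# deal_id ['int', 'str']
# deal_size ['float']
# owner ['str']
# stage ['str']
```

A field with more than one observed type, like `deal_id` here, is exactly the kind of inconsistency a data scientist would otherwise discover only after pulling and inspecting the raw files.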

Data catalogs for data warehouses

- Data warehouses internally maintain their own catalog of schema metadata.

- In this case, the data catalog should extract these system-maintained schemas using queries or API connectors.

- These schemas contain the metadata needed to lend granular context to your data assets. Data warehouses provide access through JDBC or ODBC connections, native connectors, or low-level client APIs.
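As a sketch of extracting system-maintained schemas with queries, the example below uses Python's built-in `sqlite3` module as a stand-in for a warehouse connection. SQLite keeps its catalog in `sqlite_master` and exposes column details through `PRAGMA table_info`. Many warehouses expose comparable information through `INFORMATION_SCHEMA` views instead, so the exact queries will differ by system. The `deals` and `contacts` tables are made up.

```python
import sqlite3
from typing import Dict, List, Tuple


def harvest_schema(conn: sqlite3.Connection) -> Dict[str, List[Tuple[str, str]]]:
    """Return {table: [(column, declared_type), ...]} from SQLite's system catalog."""
    schema: Dict[str, List[Tuple[str, str]]] = {}
    tables = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    for (table,) in tables:
        quoted = table.replace('"', '""')  # escape quotes inside the identifier
        # Each PRAGMA row is (cid, name, type, notnull, dflt_value, pk)
        columns = conn.execute(f'PRAGMA table_info("{quoted}")').fetchall()
        schema[table] = [(col[1], col[2]) for col in columns]
    return schema


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE deals (id INTEGER PRIMARY KEY, owner TEXT, "
    "deal_size REAL, contact_email_address TEXT)"
)
conn.execute("CREATE TABLE contacts (id INTEGER PRIMARY KEY, email TEXT)")
print(harvest_schema(conn))
```

The catalog would then store this technical metadata and let people layer descriptions, owners, and sensitivity levels on top of it.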

7 common use cases for data catalogs

- Modernizing data lakes

- Modernizing cloud

- Improving data curation

- Discovering sensitive data

- Simplifying employee onboarding

- Expediting root-cause analysis

- Streamlining data security and compliance

1) Modernizing data lakes

- Enterprise data from different sources is stored in raw form within data lakes, which makes metadata available for data governance only at a small scale.

- This restricts the adoption of data across the enterprise, because it can be challenging for users to access and analyze the data they need in the data lake.

- By adding a well-governed data catalog on top of the data lake, data scientists and business analysts can access the data they need promptly and with ease.

- They can not only trace where data came from but also transform it as it flows across various applications.

- This use case significantly boosts data lake usage and reduces duplicate data sets, which in turn reduces overall workloads and compliance risks.

2) Modernizing cloud

- Enterprises have been rapidly migrating to cloud services, especially in the last few years due to the global pandemic.

- This brings challenges such as metadata management tools customized for each cloud, and the need to know where certain types of critical data sets are located.

- To address this, enterprises use data catalogs to improve the visibility of data across on-premises, cloud, and hybrid environments.

- This use case also helps identify critical and sensitive data sets that should be prioritized for speed and reuse.

3) Improving data curation

- Data curation manages the collection of data assets made available to data users.

- Cataloging maintains the metadata needed to support functions on datasets such as browsing, searching, evaluating, accessing, and protecting data.

- Together, curation and cataloging meet the data needs of business analysts and data scientists.

- Cataloging unifies crowdsourced data curation by bringing together data from dispersed sources, so datasets can be better organized and maintained.

4) Discovering sensitive data

- One of the most interesting use cases of data catalogs is discovering sensitive data that a business didn't know existed.

- Customer details, payment information, and passwords stored in plain text sometimes sit in systems that people have forgotten about. Data catalogs play a vital role in discovering them.

5) Simplifying employee onboarding

- Onboarding new employees to an organization, and team members to new projects, becomes faster with data catalogs, because newcomers get easy, fast, and secure access to trusted data with business context.

6) Expediting root-cause analysis

- One of the best use cases for data catalog tools is root-cause analysis through data lineage.

- Once data quality information is integrated into your data lineage, the lineage shows your data's quality throughout its life cycle, from creation to storage and use in business processes.

- You can use data lineage to trace erroneous or low-quality data back to its source and proactively correct it.
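Tracing bad data back to its source amounts to walking upstream along lineage edges. The sketch below assumes a hypothetical lineage map from each asset to its direct inputs and a set of assets whose quality checks failed. It returns the failing assets whose own inputs all pass, which are the points where bad data first appears.

```python
from collections import deque
from typing import Dict, List, Set


def root_causes(lineage: Dict[str, List[str]], failing: Set[str], target: str) -> List[str]:
    """Walk upstream from `target` (breadth-first) and return failing assets
    whose direct inputs all pass, i.e. where bad data first appears."""
    seen = {target}
    queue = deque([target])
    causes = []
    while queue:
        node = queue.popleft()
        inputs = lineage.get(node, [])
        if node in failing and not any(up in failing for up in inputs):
            causes.append(node)
        for up in inputs:
            if up not in seen:
                seen.add(up)
                queue.append(up)
    return sorted(causes)


lineage = {
    "revenue_dashboard": ["revenue_model"],
    "revenue_model": ["orders_clean", "fx_rates"],
    "orders_clean": ["orders_raw"],
    "fx_rates": [],
    "orders_raw": [],
}
failing = {"revenue_dashboard", "revenue_model", "orders_clean"}
print(root_causes(lineage, failing, "revenue_dashboard"))  # ['orders_clean']
```

Here the dashboard and the model fail only because `orders_clean` feeds them bad data. Fixing that one transformation, whose raw input still passes, is the proactive correction described above.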

7) Streamlining data security and compliance

- Data catalogs offer a straightforward way to streamline data security and compliance across the organization.

What features should a data catalog offer?

Search and discovery

A data catalog should have flexible search and filtering options that let users quickly reach relevant data sets for data science, analytics, and data engineering.

The catalog should let users browse metadata through a technical hierarchy of data assets, and let them add technical information, user-defined tags, or business terms that improve search.
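Here is a minimal sketch of keyword search with tag and domain filters over catalog entries. The entry shape (`name`, `description`, `tags`, `domain`) and the simple term-count ranking are assumptions. Real catalogs often rely on a dedicated search index instead.

```python
from typing import Dict, List, Optional, Set

ENTRIES = [
    {"name": "deals", "description": "Every deal in the sales pipeline",
     "tags": {"crm", "sales"}, "domain": "sales"},
    {"name": "contacts", "description": "Customer contacts synced from the CRM",
     "tags": {"crm", "pii"}, "domain": "sales"},
    {"name": "web_events", "description": "Page views from the website",
     "tags": {"events"}, "domain": "marketing"},
]


def search(entries: List[Dict], query: str, required_tags: Optional[Set[str]] = None,
           domain: Optional[str] = None) -> List[str]:
    """Return entry names matching any query term, ranked by number of term hits."""
    terms = query.lower().split()
    results = []
    for entry in entries:
        if required_tags and not required_tags <= entry["tags"]:
            continue  # filter: entry must carry every required tag
        if domain and entry["domain"] != domain:
            continue  # filter: restrict to one business domain
        text = " ".join([entry["name"], entry["description"], *entry["tags"]]).lower()
        score = sum(text.count(term) for term in terms)
        if score > 0:
            results.append((score, entry["name"]))
    # Highest score first; ties broken alphabetically
    return [name for _, name in sorted(results, key=lambda r: (-r[0], r[1]))]


print(search(ENTRIES, "crm"))                         # ['contacts', 'deals']
print(search(ENTRIES, "crm", required_tags={"pii"}))  # ['contacts']
```

Because user-defined tags are part of the searchable text, every tag a user adds makes the entry easier to find, which is the feedback loop described above.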

Metadata harvesting

When designing an enterprise data catalog, ensure that it can harvest technical metadata from the variety of data assets that interface with enterprise applications, from object storage and self-driving databases to on-premises systems.

Metadata curation

A good data catalog provides a way for subject matter experts to contribute to the business knowledge in the form of an enterprise business glossary, tags, associations, user-defined annotations, classifications, ratings, and so on.

Data intelligence and automation

At large data scales, data catalogs essentially require artificial intelligence (AI) and machine learning techniques.

The catalog should be designed so that manual and repetitive tasks are automated by applying AI and machine learning techniques to the collected metadata.

In addition, AI and machine learning can augment what users do with data, for example by recommending data to data catalog users and to users of other services in modern dataflow platforms.
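Recommendation approaches vary widely. As a deliberately simple stand-in for machine learning, the sketch below recommends data sets that were frequently used in the same sessions as a given one (co-usage counting). The session log format and the `build_co_usage` and `recommend` functions are hypothetical.

```python
from collections import Counter
from itertools import combinations
from typing import Dict, List, Set


def build_co_usage(sessions: List[Set[str]]) -> Dict[str, Counter]:
    """Count how often each pair of data sets appears in the same session."""
    co_usage: Dict[str, Counter] = {}
    for session in sessions:
        for a, b in combinations(sorted(session), 2):
            co_usage.setdefault(a, Counter())[b] += 1
            co_usage.setdefault(b, Counter())[a] += 1
    return co_usage


def recommend(co_usage: Dict[str, Counter], dataset: str, k: int = 2) -> List[str]:
    """Return up to k data sets most often used alongside `dataset`."""
    counts = co_usage.get(dataset, Counter())
    # Sort by descending count, then by name, so ties are deterministic
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [name for name, _ in ranked[:k]]


sessions = [
    {"deals", "contacts"},
    {"deals", "contacts", "accounts"},
    {"deals", "accounts"},
    {"deals", "web_events"},
    {"deals", "contacts"},
]
co_usage = build_co_usage(sessions)
print(recommend(co_usage, "deals"))  # ['contacts', 'accounts']
```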

Enterprise-class capabilities

Enterprise data is important and needs enterprise-class capabilities, such as identity and access management, to be used properly.

Such capabilities should also allow customers and partners to contribute metadata and to expose data catalog capabilities in their own applications via a REST API.

Within an enterprise, the data catalog should become the de facto system that provides an abstraction across all of your persistence layers (object stores, databases, data lakes, and data warehouses) and for query services (using SQL, for instance) that work across all of your data assets.

A data catalog is no longer a nice-to-have in an enterprise; it is now a necessity.

What are some popular data catalog tools?

- Amazon Web Services (AWS)

- Aginity Pro

- Alation Data Catalog

- Data.World

- Erwin Data Catalog

- Alex Data Marketplace

- Collibra Catalog

- Denodo Platform

- Alteryx Connect

- Zaloni Arena

- Infogix Data360 Govern

- Anzo by Cambridge Semantics

- Cloudera Navigator

- Talend Data Catalog

- Watson Knowledge Catalog (IBM)

- Informatica Enterprise Data Catalog

- Qlik Catalog (Qlik Data Catalyst)

- SAP Data Intelligence

- Tableau Catalog

Open-source data catalog tools are typically ones built by big-tech companies as their own data discovery and cataloging solutions and later open-sourced for external teams.

Examples include:

- Amundsen by Lyft

- LinkedIn DataHub

- Apache Atlas

- Netflix Metacat

- Uber Databook

How do data catalogs support data governance?

Data governance is a set of processes and procedures to ensure the availability, usability, integrity, and security of the data in enterprise systems throughout the data life cycle. Data catalogs directly or indirectly support the following aspects of data governance:

User engagement statistics

- In some tools, usage statistics are collected from the core business intelligence tools and presented to data analysts in the data governance tool.

- These statistics show how engaged business users are with each reporting asset. Analysts use them to determine which reporting assets are gaining traction in the user base and which content is underperforming.
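Engagement statistics like these can be derived from BI view logs. The sketch below assumes a hypothetical log of `(report, user)` view events and an arbitrary threshold of two distinct viewers for flagging underperforming content.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple


def engagement(events: Iterable[Tuple[str, str]]) -> Dict[str, Dict[str, int]]:
    """Summarize (report, user) view events into total views and distinct viewers."""
    views: Dict[str, int] = defaultdict(int)
    viewers: Dict[str, Set[str]] = defaultdict(set)
    for report, user in events:
        views[report] += 1
        viewers[report].add(user)
    return {r: {"views": views[r], "viewers": len(viewers[r])} for r in views}


def underperforming(stats: Dict[str, Dict[str, int]], min_viewers: int = 2) -> List[str]:
    """Reports seen by fewer than `min_viewers` distinct users."""
    return sorted(r for r, s in stats.items() if s["viewers"] < min_viewers)


events = [
    ("pipeline_overview", "ana"), ("pipeline_overview", "bo"),
    ("pipeline_overview", "ana"), ("churn_report", "cy"),
    ("churn_report", "cy"), ("quarterly_kpis", "bo"), ("quarterly_kpis", "cy"),
]
stats = engagement(events)
print(stats["pipeline_overview"])  # {'views': 3, 'viewers': 2}
print(underperforming(stats))      # ['churn_report']
```

Counting distinct viewers separately from raw views keeps one heavy user from making a report look popular. Reports with no views at all never appear in the log, so a real tool would also compare against the catalog's full list of reports.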

Certification details

- The data governance tool identifies which datasets and visualizations have been certified and tracks ownership and certification changes over time.

- Certification details can be obtained from the underlying metadata of the BI reporting tool, or certification can be performed directly in the data catalog.

Quality of data

- In large organizations, data analysts need data profiling to detect when data feeds appear to have data quality issues.

- For example, if today's data load contains fewer rows than expected in the transaction table, an analyst or data engineer should be able to investigate the problem before reports are distributed with incomplete data.

- Data catalogs also allow analysts to flag qualitative issues with a dataset and track those issues through resolution.
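The incomplete-load example can be sketched as a simple profiling check. It compares today's row count against the average of recent loads and flags the load if it falls short by more than a tolerance. The 20% tolerance and the name `row_count_ok` are assumptions. Real profiling often checks many more properties, such as null rates, distinct counts, and value ranges.

```python
from statistics import mean
from typing import List


def row_count_ok(history: List[int], today: int, tolerance: float = 0.2) -> bool:
    """Return False if today's row count is more than `tolerance` below the
    average of previous loads, signalling a possibly incomplete load."""
    if not history:
        return True  # nothing to compare against yet
    expected = mean(history)
    return today >= expected * (1 - tolerance)


history = [10_120, 9_980, 10_050, 10_210]  # average is 10,090 rows
print(row_count_ok(history, 10_005))  # True
print(row_count_ok(history, 6_400))   # False: below 80% of 10,090 (8,072)
```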

Classification of data

- Effective governance requires data files and reports to be classified based on data sensitivity, the presence of personally identifiable information (PII), and other key metadata.

- Some data catalogs use machine learning algorithms to automate data classification, flagging personal data and other sensitive data.

- Classification metadata is necessary to inform the correct use of data under regulations such as the General Data Protection Regulation (GDPR) and other data protection requirements.

- A data catalog typically provides the ability to augment the basic metadata collected from source systems with the required data classification metadata.

Data lineage and visualization

- Before working with a dataset, data analysts must first understand the origin of the underlying data.

- Data lineage diagrams provide a visual map of the resources behind a given dashboard or dataset, establishing the complete data preparation path behind the visualization.

- A detailed data flow diagram creates the necessary business context for an analyst trying to determine whether an existing BI asset has the right information to help answer a specific business question.

Business glossary

- Unless an organization has a consistent set of definitions for key business metrics and business terms, different analysts will always use different sets of rules to measure the same metric over time.

- This inconsistency presents the business with a conflicting set of numbers and leads to a lack of confidence in the data.

- With the help of data catalogs, business analysts maintain an agreed set of definitions for all key metrics, along with established ownership of those definitions, as part of the business glossary.
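One way to picture an agreed set of definitions with established ownership is a glossary that only lets a term's owner change its definition. This is a minimal sketch. The `Glossary` class, its methods, the case-insensitive term matching, and the example definition are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Term:
    name: str
    definition: str
    owner: str


class Glossary:
    """Single source of truth for business metric definitions (hypothetical)."""

    def __init__(self) -> None:
        self._terms: Dict[str, Term] = {}

    def define(self, name: str, definition: str, owner: str) -> None:
        key = name.lower()  # "Net Revenue" and "net revenue" are the same term
        existing = self._terms.get(key)
        # Only the established owner may change an existing definition
        if existing is not None and existing.owner != owner:
            raise PermissionError(f"'{name}' is owned by {existing.owner}")
        self._terms[key] = Term(name, definition, owner)

    def lookup(self, name: str) -> Term:
        return self._terms[name.lower()]


glossary = Glossary()
glossary.define("Net Revenue", "Gross bookings minus refunds and discounts", owner="finance")
try:
    glossary.define("net revenue", "Gross bookings minus refunds", owner="marketing")
except PermissionError as err:
    print(err)  # 'net revenue' is owned by finance
print(glossary.lookup("NET REVENUE").definition)
```

Rejecting a conflicting redefinition, instead of silently keeping two versions, is what prevents the conflicting numbers described above.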

Lifecycle management

- All BI assets, including tables, dashboards, and reports, must be managed throughout their life cycle.

- Before a new dashboard is published to users, it must pass through a process that confirms it uses data and transformation rules that conform to the defined set of metric definitions.

- Over time, as business rules and data sources change, tables and reports that were previously considered the "golden reference" may become outdated and need to be updated or removed.

- An effective data management tool uses the data catalog as a mechanism to manage the life cycle of all key BI assets.
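The life cycle described above can be modelled as a small state machine with a publication gate. The states, the allowed transitions, and the rule that an asset may only be certified when every metric it uses has an agreed definition are illustrative assumptions, not a standard.

```python
from enum import Enum
from typing import Dict, Set


class State(Enum):
    DRAFT = "draft"
    CERTIFIED = "certified"
    DEPRECATED = "deprecated"
    RETIRED = "retired"


# Hypothetical allowed transitions between life-cycle states
TRANSITIONS: Dict[State, Set[State]] = {
    State.DRAFT: {State.CERTIFIED, State.RETIRED},
    State.CERTIFIED: {State.DEPRECATED},
    State.DEPRECATED: {State.CERTIFIED, State.RETIRED},  # re-certify after an update
    State.RETIRED: set(),
}


class BIAsset:
    def __init__(self, name: str, metrics: Set[str]) -> None:
        self.name = name
        self.metrics = metrics
        self.state = State.DRAFT

    def move_to(self, new_state: State, defined_metrics: Set[str]) -> None:
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"cannot go from {self.state.value} to {new_state.value}")
        # Publication gate: every metric must match an agreed definition
        if new_state is State.CERTIFIED:
            undefined = self.metrics - defined_metrics
            if undefined:
                raise ValueError(f"undefined metrics: {sorted(undefined)}")
        self.state = new_state


defined = {"net_revenue", "win_rate"}
dashboard = BIAsset("pipeline_overview", {"net_revenue", "win_rate"})
dashboard.move_to(State.CERTIFIED, defined)
dashboard.move_to(State.DEPRECATED, defined)  # e.g. business rules changed
print(dashboard.state.value)  # deprecated
```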

The complexity of effective metadata management

Managing and organizing metadata throughout the entire modern data stack is critical to the scalability of a data-driven enterprise.

However, with the ever-expanding modern data stack, this can quickly become overwhelming.

Here is a quick reminder of how complex data workflows can become:

- Event-level data attributes are defined and organized using a tracking plan.

- Event-level data is collected from websites and mobile apps by a customer data platform (CDP) or product analytics tool.

- Data is collected in business applications, either through manual input or automated interactions.

- ELT tools extract data from event sources and business applications and sync the data into a data warehouse.

- The data is stored for processing in a data warehouse.

- A transformation platform turns raw data into insights in the warehouse.

- A policy enforcement layer applies role-based data access control to datasets, tables, and fields.

- Data is turned into dashboards through a visualization solution.

- 2nd-party and 3rd-party data consumers can consume certain data sets directly via marketplaces with secure sharing capabilities.

- Reverse ETL solutions activate data back into business applications for process automation.

Data cataloging solutions play a vital role in three aspects of these complex workflows:

- When data is at rest in a data warehouse (or a data lake, or a database), the data catalog can become an authoritative source of metadata.

- When data is turned into dashboards, data catalogs can annotate the key metrics and insights that have been created.

- When data is shared with 2nd and 3rd parties, data catalogs can offer a simple way of organizing and sharing metadata on what information is available to be consumed or purchased.

Without a clear way to integrate metadata throughout the remaining components of the modern data stack, finding and leveraging data can rapidly become unwieldy.

We believe ELT and Reverse ETL solutions have an important role to play in metadata management using data catalogs.

- ELT solutions produce highly valuable metadata.

  - Not only do ELT solutions have direct connections into event collection tools and business applications, but they already schematize and annotate information for analytics in the warehouse.

  - ELT tools are well positioned to sync valuable metadata automatically to data catalog solutions, reducing manual effort and helping to integrate metadata throughout the entire modern data stack.

- Reverse ETL solutions must be able to consume and deliver metadata.

  - For Reverse ETL solutions, it is critical not only to sync raw attributes from the warehouse back into operational tools, but also to leverage and include key metadata.

  - Just as data visualization tools need to respect policy enforcement and role-based access control, Reverse ETL solutions need to ensure that data is discoverable and accessible, but controlled at the same time.
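To illustrate what it could mean for a Reverse ETL sync to carry metadata and respect access control, the sketch below drops any field whose catalog sensitivity exceeds what the destination is cleared for. It also attaches each remaining field's description to the payload. The sensitivity levels, the field metadata, and the `build_sync_payload` function are all hypothetical.

```python
from typing import Dict, List

# Hypothetical ordering of sensitivity levels taken from the catalog
LEVELS = {"public": 0, "internal": 1, "pii": 2}

# Hypothetical catalog metadata for one warehouse table
FIELD_METADATA = {
    "account_id": {"sensitivity": "internal", "description": "CRM account key"},
    "lifetime_value": {"sensitivity": "internal", "description": "Sum of paid invoices"},
    "contact_email": {"sensitivity": "pii", "description": "Primary contact email"},
}


def build_sync_payload(rows: List[Dict], clearance: str) -> Dict:
    """Keep only fields the destination is cleared for, and ship their metadata too.

    Fields missing from the catalog are dropped (default deny).
    """
    allowed = sorted(
        name for name, meta in FIELD_METADATA.items()
        if LEVELS[meta["sensitivity"]] <= LEVELS[clearance]
    )
    return {
        "fields": {name: FIELD_METADATA[name]["description"] for name in allowed},
        "rows": [{name: row[name] for name in allowed if name in row} for row in rows],
    }


rows = [{"account_id": "A-1", "lifetime_value": 1200.0, "contact_email": "ana@example.com"}]
payload = build_sync_payload(rows, clearance="internal")
print(payload["rows"])  # [{'account_id': 'A-1', 'lifetime_value': 1200.0}]
```

Driving the filter from catalog metadata, not from a hard-coded column list, means a newly classified PII field stops syncing as soon as the catalog is updated.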